Hi,

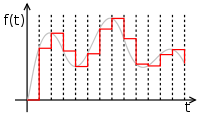

I’m working on a reverb effect based on an FDN reverb by Sean Costello. When I make large changes to the delay-tap time, it produces a “bitcrush”-like sound, which is undesirable (in this case). I’ve tried smoothing the control with tonek or port, but the artifact is still very noticeable.
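One possible cause, offered as an assumption rather than a confirmed diagnosis: `port` and `tonek` both run at control rate, so even a smoothed delay time is only updated once every `ksmps` samples (32 here), and each update makes the read position of the delay line jump. One commonly suggested workaround is to convert the delay time to an audio-rate signal with `interp` before it reaches `deltapi`, so it changes every sample. The minimal standalone sketch below does that. To make it renderable outside Cabbage, it substitutes a `vco2` test tone for `inch 1` and a `linseg` sweep for the Diffusion slider; those stand-ins and the output filename are illustrative only.

```csound
<CsoundSynthesizer>
<CsOptions>
-o delay_sweep.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 32
nchnls = 2
0dbfs = 1

instr 1
  ; test tone standing in for "inch 1" (illustrative only)
  a1 vco2 0.3, 220
  ; large, fast sweep standing in for the Diffusion slider (illustrative only)
  kdiff linseg 1, 2, 0.1, 2, 1
  kdiff port kdiff, 0.01
  ; delay time in seconds, still a k-rate value (max about 0.68 s here)
  kdel1 = (3007/kdiff)/sr
  k1 randi .001, 3.1, .06
  ; linearly interpolate the delay time to audio rate so it changes every sample
  adelt interp kdel1 + k1
  adum1 delayr 1
  adel1 deltapi adelt
  delayw a1
  outs adel1, adel1
endin
</CsInstruments>
<CsScore>
i1 0 4
</CsScore>
</CsoundSynthesizer>
```

Rendering it once as written and once with `adel1 deltapi kdel1 + k1` should make it easy to hear whether control-rate stepping is the culprit. Interpolation removes the stepping but not the pitch glide: moving a delay line's read position quickly is inherently a Doppler-style pitch shift, so large, fast changes will still bend the pitch.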

Does anyone have tips on how I could improve this? Are there perhaps other delay opcodes that are better suited to this?
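Two candidate opcodes from the Csound manual are `vdelay3` (a variable delay with cubic interpolation, whose delay time is an audio-rate signal in milliseconds) and `deltap3` (a cubic-interpolating tap for `delayr`/`delayw`). The sketch below is a minimal standalone test with `vdelay3`, again feeding it an audio-rate delay time via `interp`. A `vco2` test tone and a `linseg` sweep stand in, as assumptions, for `inch 1` and the Diffusion slider, and the output filename is arbitrary.

```csound
<CsoundSynthesizer>
<CsOptions>
-o delay_sweep_vdelay3.wav -W
</CsOptions>
<CsInstruments>
sr = 44100
ksmps = 32
nchnls = 2
0dbfs = 1

instr 1
  a1 vco2 0.3, 220              ; test tone in place of "inch 1" (illustrative)
  kdiff linseg 1, 2, 0.1, 2, 1  ; stand-in for the Diffusion slider (illustrative)
  kdiff port kdiff, 0.01
  k1 randi .001, 3.1, .06       ; +/-1 ms random modulation
  ; vdelay3 expects milliseconds, so convert seconds to ms, then to audio rate
  adelms interp 1000 * ((3007/kdiff)/sr + k1)
  ; cubic-interpolating variable delay with a 1000 ms maximum
  adel1 vdelay3 a1, adelms, 1000
  outs adel1, adel1
endin
</CsInstruments>
<CsScore>
i1 0 4
</CsScore>
</CsoundSynthesizer>
```

Replacing `deltapi` with `deltap3` in the example further down is an even smaller change to try, since it keeps the existing `delayr`/`delayw` structure.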

Here is a simplified example that reproduces the issue; it can be exported from Cabbage as a plugin and opened in a DAW:

```csound
<Cabbage>
form caption("DelayNoise") size(200, 200), pluginId("DLNS"), guiMode("queue"), presetIgnore(1)
rslider bounds(36, 26, 60, 60), channel("Modulation") range(0, 1, 1, 1, 0.001), text("Modulation") textColour(0, 0, 0, 255)
rslider bounds(114, 28, 60, 60), channel("Mix") range(0, 1, 1, 1, 0.001), text("Mix") textColour(0, 0, 0, 255)
rslider bounds(78, 96, 60, 60), channel("Diffusion") range(0.01, 1, 1, 1, 0.01), text("Diffusion") textColour(0, 0, 0, 255)
</Cabbage>
<CsoundSynthesizer>
<CsOptions>
-n -d -+rtmidi=NULL -M0 -m0d
</CsOptions>
<CsInstruments>
ksmps = 32
nchnls = 2
0dbfs = 1

instr 1
  kmix, kmix_Trig cabbageGetValue "Mix"
  kpitchmod, kpitchmod_Trig cabbageGetValue "Modulation"
  kdiff, kdiff_Trig cabbageGetValue "Diffusion"

  ; smooth the Diffusion control (10 ms half-time)
  kdiff port kdiff, 0.01
  ;kdiff tonek kdiff, 10

  a1 inch 1

  ; base delay: 3007 samples scaled by 1/kdiff, converted to seconds
  ; (exceeds the 1 s buffer below when kdiff < 3007/sr, about 0.068 at 44.1 kHz)
  kdel1 = ((3007/kdiff)/sr)
  ; slow random modulation: +/-1 ms, new target value 3.1 times per second
  k1 randi .001, 3.1, .06

  ; 1-second delay line read by an interpolating tap; the delay time is k-rate
  adum1 delayr 1
  adel1 deltapi kdel1 + k1 * kpitchmod
  delayw a1

  ; equal-power dry/wet crossfade
  asigXfadeMix = a1 * sqrt(1-kmix) + adel1 * sqrt(kmix)
  outs asigXfadeMix, asigXfadeMix
endin
</CsInstruments>
<CsScore>
i1 0 [60*60*24*7]
</CsScore>
</CsoundSynthesizer>
```

However, I came up with a solution today that is inspired by your muting suggestion:
